Progress Report One: AR-Based Orienteering

1. Target

1.1 Configure the coding environment

Since the three of us need to collaborate online on the code, the development environment must support downloading, uploading, merging, and other version-control operations. We set up a web server so that all of us can access and modify the latest version of the same program.

1.2 Implement the goal of Phase One

The first step of our project is to implement the sweeper function of our orienteering system. This function relies mainly on GPS. The design from our original project proposal is quoted below:

“To accurately find the control point, a ‘sweeper’ mode is deployed, which gives you the distance information between you and the target (without direction). The closer you are, the denser the ring is. This mode will give you an experience of geocaching.”

Because a ringing sound could be very annoying while players walk along the street, we decided to replace it with visual feedback: a colored bar that shows the distance between the player and the target building. The bar grows longer as the player gets closer to the target, and its color changes toward red at the same time.

Geolocation algorithms will be used to compute the distance between two points from their GPS coordinates.
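The report does not name the distance formula. One common choice for GPS coordinates, shown here as an assumption rather than the method actually used, is the haversine formula. With latitudes φ₁, φ₂ and longitudes λ₁, λ₂ in radians and a mean Earth radius R ≈ 6,371 km, the great-circle distance d is:

```latex
a = \sin^2\!\left(\frac{\varphi_2 - \varphi_1}{2}\right)
  + \cos\varphi_1 \, \cos\varphi_2 \, \sin^2\!\left(\frac{\lambda_2 - \lambda_1}{2}\right),
\qquad
d = 2R \arcsin\!\left(\sqrt{a}\right)
```

Treating the Earth as a sphere introduces an error of well under one percent, which is negligible on the 0 to 200 m scale of the sweeper bar.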

2. How the Goal is Met

2.1 Coding environment setup

Before we started coding, we set up a web server. All of us connected our laptops to this server so that we could merge the code whenever anyone updated something. Adobe Dreamweaver supports this workflow well and can merge the code automatically when we choose to do so.

2.2 Geolocation and distance calculation

The sweeper function requires the coordinates of the mobile device. HTML5 supports geolocation: we call getCurrentPosition() to obtain the user’s coordinates. This call is made inside Argon.onRender so that the user’s location keeps updating. Whenever the location is updated, the distance between the mobile device and the target is recalculated with a distance formula, so the visual feedback shown to the user updates in real time.
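A minimal sketch of the distance step, assuming the haversine formula (the report does not name its method). It returns the great-circle distance in meters on a spherical Earth of radius 6,371 km. In the browser, the player’s coordinates would come from `position.coords.latitude` and `position.coords.longitude` in the success callback of `navigator.geolocation.getCurrentPosition()`, and the target’s coordinates from the control point.

```typescript
// Mean Earth radius in meters (spherical approximation).
const EARTH_RADIUS_M = 6371000;

function toRadians(degrees: number): number {
  return (degrees * Math.PI) / 180;
}

// Great-circle distance in meters between two latitude/longitude pairs
// given in decimal degrees, using the haversine formula.
function haversineMeters(lat1: number, lon1: number, lat2: number, lon2: number): number {
  const sinHalfDLat = Math.sin(toRadians(lat2 - lat1) / 2);
  const sinHalfDLon = Math.sin(toRadians(lon2 - lon1) / 2);
  const a =
    sinHalfDLat * sinHalfDLat +
    Math.cos(toRadians(lat1)) * Math.cos(toRadians(lat2)) * sinHalfDLon * sinHalfDLon;
  // Clamp to 1 so floating-point overshoot cannot push asin out of its domain.
  return 2 * EARTH_RADIUS_M * Math.asin(Math.min(1, Math.sqrt(a)));
}
```

One degree of latitude comes out at roughly 111.2 km, a quick sanity check for the function.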

The code can be viewed online; the link is given in Section 3.

2.3 Distance indication bar

The distance indication bar changes to reflect the distance from the player to the next control point. Each control point is registered at a location with an explicitly specified latitude and longitude. The distance between the player’s position and the control point is calculated, and the result is passed to radar.js, which draws the distance indication bar.
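The data flow described above can be sketched as follows. The `ControlPoint` shape, the function names, and the `renderBar` callback (a stand-in for whatever entry point radar.js exposes, which the report does not name) are all hypothetical; the distance function is passed in so that any implementation, such as a haversine function, can be used.

```typescript
// Hypothetical record for a registered control point; the report only says
// each point has an explicitly specified latitude and longitude.
interface ControlPoint {
  name: string;
  lat: number;
  lon: number;
}

// Any distance function returning meters between two coordinate pairs.
type DistanceFn = (lat1: number, lon1: number, lat2: number, lon2: number) => number;

// Stand-in for the bar-drawing entry point in radar.js.
type BarRenderer = (distanceM: number) => void;

// Recompute the player-to-target distance and hand it to the bar renderer.
function updateSweeper(
  playerLat: number,
  playerLon: number,
  target: ControlPoint,
  distance: DistanceFn,
  renderBar: BarRenderer
): number {
  const d = distance(playerLat, playerLon, target.lat, target.lon);
  renderBar(d);
  return d;
}
```

Keeping the distance math and the drawing behind separate parameters lets each piece be tested on its own.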

The bar covers a range of 0 to 200 m. A distance within this range is shown by the number of rectangles in the bar: more rectangles mean the player is closer, and fewer mean the player is farther away.
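A sketch of the distance-to-rectangles mapping, assuming a full bar of 10 rectangles (the report does not give the count). The distance is clamped to the 0 to 200 m range, scaled so that 1 means “at the target”, and rounded up, so any distance inside the range shows at least one rectangle.

```typescript
// Assumed bar resolution; the report does not state how many rectangles fill the bar.
const MAX_RECTANGLES = 10;
// Range covered by the bar, from the report: 0 to 200 m.
const BAR_RANGE_M = 200;

// Number of rectangles to draw: a full bar at 0 m, an empty bar at 200 m or more.
function rectangleCount(distanceM: number): number {
  const clamped = Math.min(Math.max(distanceM, 0), BAR_RANGE_M);
  const closeness = (BAR_RANGE_M - clamped) / BAR_RANGE_M; // 1 at the target, 0 at 200 m
  return Math.ceil(closeness * MAX_RECTANGLES);
}
```

For example, a player 150 m away gets 3 of the 10 rectangles, and a player 100 m away gets 5.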

A separate div, styled in the CSS file, holds the bar. Inside this div, a canvas is first created for drawing the rectangles. The border of the canvas is drawn so that players can see how full the bar can get and thus judge roughly how far they still need to go. Next, the distance is scaled and rounded up to get the number of rectangles to draw. The color of the rectangles changes as the distance decreases: it starts red, which gives players a tense feeling, and shifts toward blue, which is more relaxing.
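The drawing step could be organized as in the sketch below. The linear red-to-blue blend, the 2-pixel gap, and the function names are assumptions; the report only says the color moves from red toward blue as the distance decreases. In the browser, each returned rectangle would be painted with the Canvas 2D calls `ctx.fillStyle = rect.color` and `ctx.fillRect(rect.x, rect.y, rect.width, rect.height)`, and the canvas border with `ctx.strokeRect()`.

```typescript
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
  color: string;
}

// Linear blend from red (at the far end of the range) to blue (at the target).
function barColor(distanceM: number, rangeM: number): string {
  const t = Math.min(Math.max(distanceM / rangeM, 0), 1); // 1 = far, 0 = at the target
  const red = Math.round(255 * t);
  const blue = Math.round(255 * (1 - t));
  return `rgb(${red}, 0, ${blue})`;
}

// Lay out `count` equal rectangles left to right inside the canvas, sized so
// that `maxCount` rectangles exactly fill it with a small gap between them.
function barRects(
  count: number,
  maxCount: number,
  canvasWidth: number,
  canvasHeight: number,
  color: string
): Rect[] {
  const gap = 2; // hypothetical spacing in pixels
  const width = (canvasWidth - gap * (maxCount + 1)) / maxCount;
  const rects: Rect[] = [];
  for (let i = 0; i < count; i++) {
    rects.push({ x: gap + i * (width + gap), y: gap, width, height: canvasHeight - 2 * gap, color });
  }
  return rects;
}
```

Computing the geometry separately from the canvas calls keeps the layout logic testable outside the browser.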

One note on drawing elements that move with the camera, rather than sticking to a target in the world: do not draw them directly in the HTML. Otherwise, the drawing pushes the tracked object away by some distance. We use a div tag and CSS to avoid this problem.

2.4 Device orientation and map drawing

We designed the app so that when the device is held upright, it shows the video and tracks objects, and when it lies flat, it shows the map, which helps the player navigate. This requires the device’s orientation. The property ARGON.deviceAttitude returns a 4×4 matrix from which the device’s rotation can be computed. By decomposing this matrix, we can determine whether the device is upright.
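One way to decompose the attitude matrix is sketched below. It rests on assumptions about conventions that the report does not state and that Argon may not share: the matrix is a flat array of 16 numbers in column-major order (the WebGL convention), its upper-left 3×3 block rotates device axes into world axes, and world “up” is +Z. Under those assumptions, the third column is the screen normal in world coordinates, and the angle between it and world up tells whether the device lies flat. The 45° threshold is hypothetical.

```typescript
// Assumed layout: 16 numbers, column-major, rotation in the upper-left 3x3 block.
type Mat4 = number[];

// Angle in degrees between the device's screen normal (local +Z) and the
// world vertical. Facing up and facing down both count as 0 degrees.
function tiltFromVerticalDegrees(m: Mat4): number {
  // Third column = device Z axis expressed in world coordinates.
  const x = m[8];
  const y = m[9];
  const z = m[10];
  const length = Math.sqrt(x * x + y * y + z * z);
  if (length === 0) throw new Error("Degenerate attitude matrix");
  const cosAngle = Math.min(1, Math.abs(z) / length);
  return (Math.acos(cosAngle) * 180) / Math.PI;
}

// Treat the device as lying flat when its screen normal is within the
// (hypothetical) threshold of vertical.
function isLyingFlat(m: Mat4, thresholdDeg: number = 45): boolean {
  return tiltFromVerticalDegrees(m) < thresholdDeg;
}
```

If Argon uses a different axis or storage convention, only the choice of column and component needs to change.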

Based on this result, we either show the map or clear the map area. The map image is also drawn in a canvas object, which is positioned by a CSS div.
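Because the render callback runs on every frame, one way to implement the show-or-clear step is to touch the map canvas only when the flat/upright state changes. The sketch below is our own assumption with hypothetical names, not the report’s code. In the browser, `"draw-map"` would correspond to a Canvas 2D `drawImage()` call with the map picture and `"clear-map"` to `clearRect()` over the map canvas.

```typescript
type MapAction = "draw-map" | "clear-map" | "none";

// Tracks the previous orientation so the map is redrawn or cleared only on a change.
class MapToggle {
  private wasFlat: boolean | null = null; // null until the first frame

  update(isFlat: boolean): MapAction {
    const changed = this.wasFlat !== isFlat;
    this.wasFlat = isFlat;
    if (!changed) return "none";
    return isFlat ? "draw-map" : "clear-map";
  }
}
```

Skipping redundant redraws avoids repainting the same map image dozens of times per second while the device stays flat.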

2.5 Find a specific item

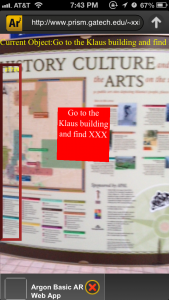

We took a picture of a poster board at TSRB and processed the image in Photoshop. The image quality was not entirely satisfactory, but we could still track it successfully. The board can serve as a control point: whenever a user’s device tracks it, we update a CSS text element to tell the user the next control point.
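Since tracking events typically keep firing while the poster stays in view, the progression logic should advance only once per control point. A minimal sketch, with hypothetical target IDs and hint strings (the report does not list its control points): the returned text could be written into the overlay element’s `textContent`.

```typescript
// Hypothetical course entry: the tracked target and the hint revealed when it is found.
interface Checkpoint {
  targetId: string;
  nextHint: string;
}

class CourseProgress {
  private checkpoints: Checkpoint[];
  private index = 0;

  constructor(checkpoints: Checkpoint[]) {
    this.checkpoints = checkpoints;
  }

  // Call whenever the tracker reports `targetId`. Returns the hint to display
  // the first time the current checkpoint is tracked, otherwise null.
  onTracked(targetId: string): string | null {
    const current = this.checkpoints[this.index];
    if (current === undefined || current.targetId !== targetId) return null;
    this.index += 1;
    return current.nextHint;
  }
}
```

Ignoring targets that are out of order also keeps players from skipping ahead in the course.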

Sections 2.4 and 2.5 were not part of our Phase One plan. We decided to complete them this week to save time for future tasks.

3. Step-by-step instructions

If you are within 200 meters of TSRB, the sweeper function is activated. As you get closer to the target we set, the color bar on the screen grows longer, and the remaining distance is shown above the bar.

Lay the mobile device flat to view the map, and hold it upright to close the map.

Find and track the nearby poster board; a CSS text overlay will then tell you the next control point.

Video Link: http://www.youtube.com/watch?v=RrlL7G_WoGk

App Link: http://www.prism.gatech.edu/~xxie37/ar/aro.html

4. Work Plan in Phase Two

We have implemented “finding a specific location or item” (3.1.1) and the “sweeper function” (3.3) described in our proposal. In the next phase, we will finish the remaining tasks for players at each control point, including “collecting pieces of information” (3.1.2) and “fighting monsters” (3.1.3). If time allows, we will design more AR-based tasks. We will also refine the navigation system by making the campus map interactive and adding other tools that help players navigate. Before the next due date, we will finish a detailed plan for the whole orienteering game and write a script for it. In Phase Three, we will complete the app interface, make the system update information automatically as the orienteering progresses, and test the app to fix bugs and other potential problems. The schedule for Phase Two is as follows:

| Date | Schedule | Note |
|--------|----------|--------------|
| Mar 14 | | Meet at 3 pm |
| Mar 21 | | Meet at 3 pm |
| Mar 28 | | Meet at 3 pm |

5. Individual Contribution

Xueyun Zhu:

Draw the distance indication bar

Investigate device orientation and implement map function

Design the interface of this app

Xuwen Xie:

Research methods and algorithms for handling geolocation

Outline the report

Bo Pang:

Set up the coding environment

Merge all the code together and fix bugs
