ARt Explore

Final Project post by:

Andy Pruett, Anshul Bhatnagar and Mukul Sati

Overview and Lessons Learnt:

Our project aimed to use AR to enhance the experience of viewing and interacting with large artwork such as murals and graffiti. Natural image tracking presents substantial challenges for images of this size, and we sought to solve them so as to allow the creation of seamless augmented experiences.

Through the development of this project we gained an understanding of which types of interaction are feasible and effective within our problem space, and which are not. We were also exposed to some of the underlying details of tracking technology and successfully achieved the goal we set out to accomplish, prototyping a couple of experiences that demonstrate seamless tracking of large images.

Problem-solution co-evolution:

There is a body of work in cognitive problem-solving on the problem-solution co-evolution that any problem-solving episode entails. In an exploratory project such as ours, this affected us greatly: we continually refined our goals as well as our approaches.

We started with the idea of recovering lost murals (murals that were removed for political or social reasons), allowing users to experience the temporal evolution of artwork at a location. We adapted this idea once we realized that there might not be enough features to track and identify the background that we would augment with the removed artwork. GPS would not help either, because it is too inaccurate and inconsistent at the scale in question. The new problem was thus to provide continuous tracking of large images and artwork.

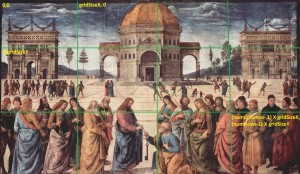

The issue with tracking large images such as murals is that what counts as a feature changes depending on how close or far the camera is from the target image. For example, when you are close to the mural, the features are sharp and pointed, but the same features become curved or blend into the image when the camera moves away from the target. The view might also not include enough features for the image to be tracked successfully. In addition, Vuforia places a limit on the file size of images that can be uploaded to the device database for tracking.

Our Approach

To solve these problems, we used a two-step process:

- Divide a high-res version of the image into a grid of sub-images and stitch them together to form one Multi-Image target.

- Take a low-res image of the whole target and add it to the Multi-Image target.

The first step segregates the image into sub-images, which not only helps track the image from close distances but also allows us to create spatially localized experiences, discussed later. The second step enhanced these experiences and allowed the image to be tracked from larger distances. To assist in the creation of such experiences, we also developed a standalone application that lets a user automatically generate these sub-images and combine them into a multi-image trackable target.
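The two steps above can be sketched roughly as follows. This is only an illustrative sketch, not the code from our standalone application: the function names `grid_boxes` and `overview_size`, the grid dimensions, and the 1024-pixel cap on the overview are all assumptions made for the example. It only computes the crop rectangles and the low-res size, leaving the actual cropping and resizing to an image library.

```python
from typing import List, Tuple

# (left, top, right, bottom) in pixels, right/bottom exclusive
Box = Tuple[int, int, int, int]


def grid_boxes(width: int, height: int, rows: int, cols: int) -> List[Box]:
    """Return row-major crop boxes that tile a width x height image.

    Integer division on the cell edges guarantees the boxes cover the
    image exactly, even when the size is not divisible by rows/cols.
    """
    if rows < 1 or cols < 1:
        raise ValueError("rows and cols must be at least 1")
    boxes: List[Box] = []
    for r in range(rows):
        top = r * height // rows
        bottom = (r + 1) * height // rows
        for c in range(cols):
            left = c * width // cols
            right = (c + 1) * width // cols
            boxes.append((left, top, right, bottom))
    return boxes


def overview_size(width: int, height: int, max_side: int) -> Tuple[int, int]:
    """Size of the low-res overview, preserving aspect ratio.

    max_side is a hypothetical cap chosen for this example; it is not
    a limit taken from any tracking library.
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    return (max(1, width * max_side // longest),
            max(1, height * max_side // longest))


if __name__ == "__main__":
    # A hypothetical 4000 x 2000 photo of a mural, cut into 2 rows x 4 columns.
    for box in grid_boxes(4000, 2000, rows=2, cols=4):
        print("tile", box)
    print("overview", overview_size(4000, 2000, max_side=1024))
```

Each box uses the (left, upper, right, lower) convention, so it could be handed directly to a crop routine such as Pillow's `Image.crop`. The tiles and the overview would then be registered together as the parts of one multi-image target.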

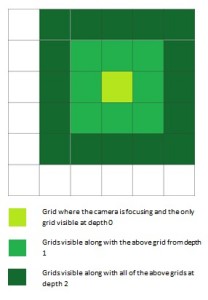

Now that we had a trackable target that works from all distances, we used information about the image, the field of view of the iPhone camera and the distance of the phone from the image to determine which grid cells are visible to the user. Combined with how far the user is from the tracked image, this lets us recognize the user's spatial context and what is visible to them. We use this information to retrieve the relevant content from the database. We are thus able to recognize the Level-of-Detail that needs to be conveyed.
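The geometry behind this can be illustrated with a simplified sketch. It assumes the camera looks straight at the mural (optical axis perpendicular to the wall, no roll), that positions are measured in metres from the mural's top-left corner, and that the field-of-view angles are known; the names `visible_cells` and `coverage` are hypothetical. A real implementation would use the full camera pose reported by the tracker rather than this head-on approximation.

```python
import math
from typing import List, Tuple


def visible_cells(mural_w: float, mural_h: float, rows: int, cols: int,
                  cam_x: float, cam_y: float, distance: float,
                  hfov_deg: float, vfov_deg: float) -> List[Tuple[int, int]]:
    """Return (row, col) grid cells overlapped by the camera's view.

    The view footprint on the wall is a rectangle centred on
    (cam_x, cam_y) whose half-extent is distance * tan(fov / 2).
    """
    half_w = distance * math.tan(math.radians(hfov_deg) / 2)
    half_h = distance * math.tan(math.radians(vfov_deg) / 2)
    left, right = cam_x - half_w, cam_x + half_w
    top, bottom = cam_y - half_h, cam_y + half_h

    cell_w = mural_w / cols
    cell_h = mural_h / rows
    cells: List[Tuple[int, int]] = []
    for r in range(rows):
        for c in range(cols):
            x0, x1 = c * cell_w, (c + 1) * cell_w
            y0, y1 = r * cell_h, (r + 1) * cell_h
            # Standard axis-aligned rectangle overlap test.
            if x0 < right and x1 > left and y0 < bottom and y1 > top:
                cells.append((r, c))
    return cells


def coverage(cells: List[Tuple[int, int]], rows: int, cols: int) -> float:
    """Fraction of the grid in view; a crude stand-in for level of detail."""
    return len(cells) / (rows * cols)


if __name__ == "__main__":
    # Hypothetical 8 m x 4 m mural split into 2 rows x 4 columns.
    near = visible_cells(8.0, 4.0, 2, 4, 1.0, 1.0, 0.5, 60.0, 45.0)
    far = visible_cells(8.0, 4.0, 2, 4, 4.0, 2.0, 10.0, 60.0, 45.0)
    print(near, coverage(near, 2, 4))
    print(far, coverage(far, 2, 4))
```

A low coverage value suggests the user is focused on a localized detail, while a value near 1 suggests they are looking at the mural as a whole, so content could be retrieved for the visible cells or for the entire image accordingly.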

User Interactions

The user moves around in 3D space and, using the mechanisms explained above, tracking is maintained and information about the user's position is obtained. We then use this information to identify the user's focus and level of detail, and allow them to post comments or join an existing conversation.

This makes the experience more social and interactive. People can talk about certain localized features of the image, or about the image as a whole, just by moving around in the space in front of it. We also experimented with in-situ display of such comments and augmentations to conserve screen real estate.

Code, Video and Paper:

Code: https://github.com/indivisibleatom/artExplore

Video: http://youtu.be/s5taQOzyBNo

Paper: http://www.cc.gatech.edu/~msati3/veFinal.pdf
