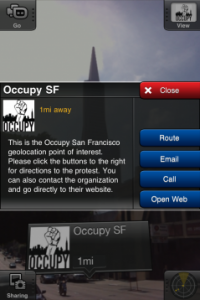

Interesting article (with video examples) of how some are using AR as a tool for social change… just in case anyone gets inspired. 🙂

Monthly Archives: January 2012

Reminder: set the “content-type” on your web program

As I pointed out in class, you need to make sure that the HTTP response you send from your server includes the correct content type, so that Argon interprets it correctly. To set the content type of the response in Python on App Engine, I did this:

self.response.headers['Content-Type'] = 'application/vnd.google-earth.kml+xml'
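If you are not using the App Engine handler classes, the same idea can be sketched as a plain WSGI app. This is only a minimal illustration, not the handler I used in class: the names `application`, `KML_CONTENT_TYPE`, and `SAMPLE_KML` are made up for the example, and the KML body is a placeholder.

```python
# Minimal WSGI sketch: serve a KML document with the KML content type.
# All names here are illustrative assumptions, not part of any course code.

KML_CONTENT_TYPE = "application/vnd.google-earth.kml+xml"

# Placeholder document; replace with the KML your program generates.
SAMPLE_KML = """<?xml version="1.0" encoding="UTF-8"?>
<kml xmlns="http://www.opengis.net/kml/2.2">
  <Document>
    <name>Example</name>
  </Document>
</kml>
"""


def application(environ, start_response):
    """Return the sample KML with an explicit Content-Type header."""
    body = SAMPLE_KML.encode("utf-8")
    headers = [
        ("Content-Type", KML_CONTENT_TYPE),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]
```

To try it locally, you could pass `application` to `wsgiref.simple_server.make_server("localhost", 8080, application)` and call `serve_forever()` on the result, then check the response headers in your browser's developer tools.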

AR Experience: Nokia’s Westwood Experience

We mentioned the Westwood Experience in class today. Ron Azuma provided me a link to a page with the details and video, http://ronaldazuma.com/westwood.html

Some AR Experiences (Jan 17)

Some AR projects and experiences we mentioned in class, or that I thought about during the discussion, that you may want to look at.

- The Duran Duran AR project. Probably the first AR Concert experience, possibly one of the first large-scale public uses of AR.

- The DigitalDesk, an early projector-based AR system, developed by Wellner and Mackay at Xerox EuroPARC. http://video.google.com/videoplay?docid=5772530828816089246

- The various videos in the slides and in the posted resources (e.g., Uncle Roy All Around You).

Himango 4D Concert

AR experiences do not exist only on personal screens. In fact, when augmented reality is used at a larger scale, such as in a stage performance, it can immerse the audience in a mixture of the virtual and the real. Here’s an example I would like to share because it offers an immersive experience to multiple viewers at once. The Himango Concert, performed at the National Theater of South Korea, is an experimental performance that combines music, dance, and augmented reality. In this show, augmented reality technology (starting at 1:30) is combined with 4D display technology to create a striking mixed-reality visual for the audience. The virtual pattern created by AR reacts to the dancer’s elegant movements in real time, flying around the dancer to create an aurora effect. Augmented reality adds interactivity to the performance, which can give viewers unexpected excitement.

AR Experience Report: Articulated Naturality Web

QderoPateo Communications (QPC) introduced their concept of the future as the Articulated Naturality Web (ANW). What I find most compelling about their vision for outdoor AR is the way information is seamlessly integrated into the natural world (when possible), rather than floating in front of the world. With the current state of technology, this kind of integration with the natural world is generally feasible only on a smaller scale. However, as outdoor tracking becomes more precise and 3D models of our outdoor environments become more available, this type of augmentation really makes sense for AR experiences that are meant to inform the user.

For instance, one of the biggest advantages of using natural surfaces when informing the user about their environment is the automatic correlation between semantic information and objects in the environment. This creates a more intuitive interface. Since both information about the object and the actual object are perceptually processed as one and the same, the user is freed from the burden of consciously associating one with the other. As seen in the video (2:04), a hotel with room availability portrayed as glowing rooms is a great example of how useful this concept can be.

ANW is touted by QPC as “a complete renaissance in the way we approach technology”. Although ANW as portrayed in the video is certainly compelling (after getting past all the squinting from lack of HMDs), I would argue that rather than being an entirely new way of thinking about technology, it’s simply the next logical development for AR. This video inspires me to think carefully about the purposes of a particular interface, and to keep those purposes in mind to make it intuitive. When developing an AR experience that augments the world with many layers of information, making it easy for the user to process can be challenging. In such a scenario, doing as much as possible to merge the semantics with their corresponding objects may be a solution.

Invizimals

Invizimals presents an interesting take on AR. It is a game for the PlayStation Portable in which players capture, train, and battle virtual creatures, similar to Pokémon, but Invizimals adds an intriguing AR twist. With the aid of a hardware attachment to the PSP, players “see” the creatures as existing in the “real” world, as though the PSP were a window through which these normally invisible creatures are revealed.

What I think is most interesting about this example is that it takes AR and makes it straightforward, understandable, and appealing enough to be a successful children’s game (with multiple sequels!). The project designers use AR in several ways to make for a very engaging experience. To discover new monsters, players must “find” them in their real environment, which encourages exploration of real space. To orient the Invizimals in space, players must use a fiducial marker, but this is integrated into the game as a sort of “trapping” device. Players can use other extensions of the real world to interact with their Invizimals, such as shaking their PSP to trigger an earthquake attack, or blowing into the microphone to trigger a whirlwind.

One inspiration I would like to draw from this project for my own is the way Invizimals makes its AR integration gratifying enough to carry a fully fledged game.

VOCALOID Hatsune Miku concert

When looking around for an AR project, I remembered a YouTube video I had watched of a live concert featuring an animated character, where screens and other effects made it look like the character was actually on the stage. This was a video of the live concert of Hatsune Miku, a character from the voice synthesizer program called VOCALOID. It works through a twist on an old illusion called Pepper’s Ghost. The image is projected from a regular projector overhead pointing down. Then it hits a thin metalized film at a 45-degree angle. The film reflects the image and is pretty much see-through where there is no image on it. This makes it look like the character is either walking or floating around on stage. It also allows the character to look 3D even though the image is actually only 2D. The reason that this was so compelling was the idea that we’re getting closer to having actual holograms that we could watch for entertainment. And it allowed a character whose voice is pretty much the only concrete thing about her to have a live concert for fans to enjoy.

Foxtrax: the Failure of the “Glowpuck”

One early example of AR being used in mainstream sports is the Foxtrax puck developed by the Fox network after they began airing National Hockey League games in 1994. The Foxtrax pucks contained electronics that emitted infrared pulses, which were detected by specialized equipment in the hockey arenas and used to compute the puck’s coordinates. Computer graphics could then be superimposed over the puck – the “glowpuck”, as it became known colloquially, appeared on one’s TV screen surrounded by a blue glow. Fox implemented this in an effort to make hockey easier to watch for casual or new fans, as focus groups had indicated that new hockey viewers had trouble following the puck. For additional style points, the puck would glow red and leave a comet tail behind it when its speed was detected to exceed 70 miles per hour.

While Fox reported success in attracting new viewers after implementing Foxtrax, there was a huge backlash among hockey’s more serious fanbase who saw the visual additions as pointless distractions. Many claimed that the graphics took away from the gritty nature of the game, turning it into something more akin to a video game. The technology turned into a running joke until ABC bought the NHL broadcast rights in 1998 and immediately returned to normal pucks.

While Foxtrax is often seen as a failure, I find it to be a compelling example of AR in that it did have some success in teaching newcomers to watch hockey – its failure was forcing this teaching tool upon those who didn’t need it. One can imagine a person trying to get into hockey by using a personal Foxtrax filter, and then disabling it once they had acclimated to the speed of the game. Foxtrax can serve as a positive example of designing with a specific problem in mind, but also as a cautionary example of failing to consider one’s whole audience.

Touchable Holography

I found this after my first example, but I thought this was actually much more compelling, so I’m adding it. The inclusion of other senses besides vision in AR would almost certainly increase immersion and help to focus attention. For instance, an educational application including vision, sound and touch might be much more compelling than just text, diagrams on a board, physical models, or vision alone. I know one “standard” application of this sort is chemistry (modeling molecules), but I’ve also heard of immersive water flow simulations and math applications in VR. I would assume those types of applications could be transferred to AR (or a mix of AR/VR).

Studies have shown that attention is a large factor in memory and learning, and immersion or presence can affect attention. So, presumably, anything which increases attention would improve learning and retention. I’d be interested in pursuing immersive experiences that help students (especially, but not exclusively, special needs students) to improve the quality and quantity of their education.